Metadata

- Author:: Crystal Widjaja

- Full Title:: Why most analytics efforts fail

- Category:: 🗞️Articles

- Document Tags:: Data culture, Data Team Vision and Mission

- URL:: https://www.reforge.com/blog/why-most-analytics-efforts-fail

- Finished date:: 2024-03-29

Highlights

Beyond all of the tooling, there is one foundational thing that makes or breaks any data initiative within a company: how you think about what to track, how to track it, and how to manage it over time. If you get these things wrong, the best tooling in the world won’t save you. (View Highlight)

Instead, the root cause typically stems from one or more of the following:

- Tracking metrics as the goal vs analyzing them.

- Developer/Data mindset vs a Business User mindset.

- Wrong level of abstraction.

- Written only vs visual communication.

- Data as a project vs ongoing initiative. (View Highlight)

Tracking Metrics As The Goal vs Analyzing Them

Many teams believe the goal of data initiatives is to track metrics. The real goal, though, is to analyze those metrics. Those two things are very different. The latter is how we make information actionable. Making information actionable isn’t about reporting on the number of people that do something; it is about separating what successful people do from what failed people do in our products so that we can take steps towards improvements. This nuance is commonly lost, but as you will see, it fundamentally changes how we approach what we track and how we track it. (View Highlight)
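To make the tracking-versus-analyzing distinction concrete, here is a minimal Python sketch. It is not from the article: the event names, the sample data, and the choice of "Order Completed" as the definition of a successful user are all assumptions made for illustration. Instead of counting how many people fired each event, it compares how often successful and failed users did each thing.

```python
from collections import defaultdict

# Hypothetical event log of (user_id, event_name) pairs; invented for illustration.
events = [
    ("u1", "Search Completed"),
    ("u1", "Promo Applied"),
    ("u1", "Order Completed"),
    ("u2", "Search Completed"),
    ("u2", "Order Completed"),
    ("u3", "Search Completed"),
    ("u4", "Search Completed"),
    ("u4", "Promo Applied"),
    ("u4", "Order Completed"),
]

# Assumed definition of success: the user fired this event at least once.
SUCCESS_EVENT = "Order Completed"


def event_adoption_by_outcome(events, success_event):
    """Return {event_name: (share of successful users, share of failed users)}."""
    events_by_user = defaultdict(set)
    for user_id, name in events:
        events_by_user[user_id].add(name)

    successful = [u for u, seen in events_by_user.items() if success_event in seen]
    failed = [u for u, seen in events_by_user.items() if success_event not in seen]

    def share(users, name):
        # Fraction of the given users who fired the event at least once.
        if not users:
            return 0.0
        return sum(name in events_by_user[u] for u in users) / len(users)

    other_names = {name for _, name in events} - {success_event}
    return {
        name: (share(successful, name), share(failed, name))
        for name in sorted(other_names)
    }


report = event_adoption_by_outcome(events, SUCCESS_EVENT)
for name, (ok, bad) in report.items():
    print(f"{name}: successful={ok:.0%} failed={bad:.0%}")
```

An event that both groups fire equally often says little; a large gap between the two shares is a candidate for further investigation, not proof of causation.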

At Gojek, one of the keys was that most of our business users were not SQL analysts. Instead, they were non-technical product managers, marketers, or operations managers. (View Highlight)

New highlights added 2024-03-31

The true test for us: can someone in our operations/customer care team access the event tracking tool and UI platform and use it independently without a full onboarding session? The operations/care team was the least educated on the market and the most distant from the product development process. If they were able to answer questions, then it was likely the rest of the team could as well. (View Highlight)

Bad tracking is when our events are too broad; good tracking is when our events are too specific; great tracking is when we have balanced the two. (View Highlight)
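One possible reading of the three abstraction levels, sketched in Python. The event names, the `service` and `promo_applied` properties, and the helper `count_by_property` are hypothetical. Treating "balanced" as one event per user action with the detail carried in properties is an assumption made here, not something stated in the highlight.

```python
from collections import Counter

# Too broad: a single catch-all event, so nothing can be segmented.
too_broad = [{"event": "Button Clicked"}, {"event": "Button Clicked"}]

# Too specific: the detail is baked into the name, so every variant
# becomes its own event and the list grows with each new combination.
too_specific = [
    {"event": "Food Checkout Clicked With Promo"},
    {"event": "Ride Checkout Clicked Without Promo"},
]

# Balanced (assumed shape): one event per user action, detail in properties.
balanced = [
    {"event": "Checkout Clicked", "properties": {"service": "food", "promo_applied": True}},
    {"event": "Checkout Clicked", "properties": {"service": "ride", "promo_applied": False}},
    {"event": "Checkout Clicked", "properties": {"service": "food", "promo_applied": False}},
]


def count_by_property(events, event_name, prop):
    """Count occurrences of one event, grouped by a single property value."""
    return Counter(
        e["properties"][prop]
        for e in events
        if e["event"] == event_name and prop in e.get("properties", {})
    )


by_service = count_by_property(balanced, "Checkout Clicked", "service")
by_promo = count_by_property(balanced, "Checkout Clicked", "promo_applied")
print(dict(by_service))
print(dict(by_promo))
```

With the balanced shape, a non-technical user can pick one recognizable event and break it down by a property, rather than hunting through a long list of near-duplicate event names.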

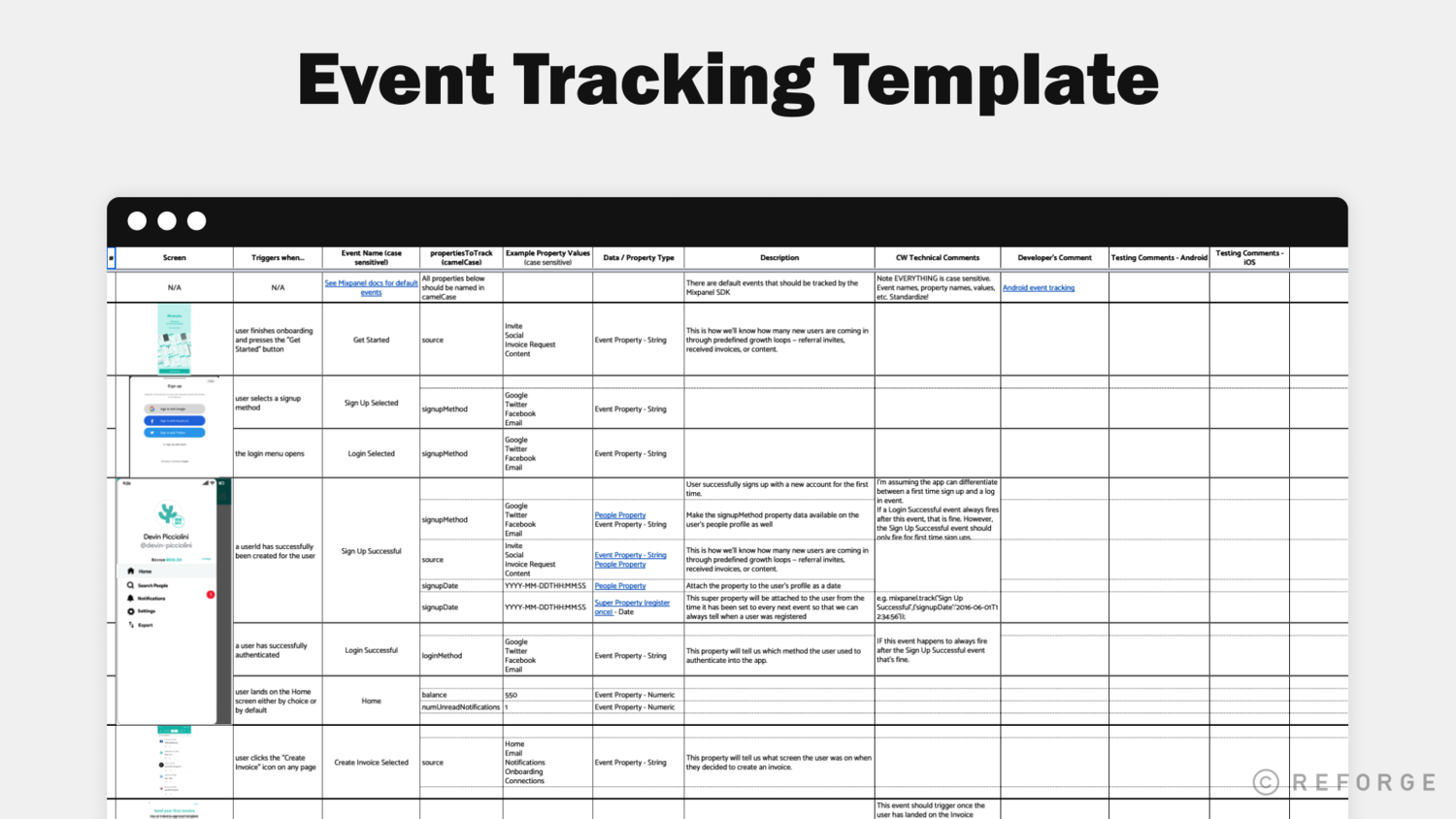

One, including screenshots of what is happening in the product when an event triggers. Two, mapping events to visual representations of customer journeys. (View Highlight)

At a minimum, the dictionary needs to be simple and easy to understand. Things like clear naming conventions are the basics. But the true test is if someone entering the company for the first time is able to look at the Event Tracker and quickly map the events to their actions in the product without needing to read every event’s definition. (View Highlight)
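A naming convention is easier to keep when it is checked automatically. The sketch below assumes a hypothetical "Title Case words, at least two of them" rule such as `Checkout Clicked`; the highlight does not say which convention the author used, so the pattern and the sample names are illustrative only.

```python
import re

# Assumed convention: two or more capitalized words separated by single spaces,
# for example "Order Completed". This rule is hypothetical.
EVENT_NAME_PATTERN = re.compile(r"[A-Z][a-z]+( [A-Z][a-z]+)+")


def find_unclear_names(event_names):
    """Return the names that do not match the assumed naming convention."""
    return [name for name in event_names if not EVENT_NAME_PATTERN.fullmatch(name)]


# Hypothetical entries from an event dictionary.
dictionary_names = ["Checkout Clicked", "Order Completed", "chk_clk_v2", "event17"]
unclear = find_unclear_names(dictionary_names)
print(unclear)
```

A check like this only covers the basics; it cannot tell whether a newcomer would map a name to the right action in the product, which is the test the highlight describes.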

Bad signals:

- There is a single person who knows how to do tracking; no one else knows how to write the event specs themselves. (View Highlight)

Good signals:

- Lots of teams are using the event tracking sheet and analytics tool (track usage of your tools like you track usage of your product). (View Highlight)